TL;DR

From 2026 onwards, audits will no longer ask whether you use AI. What matters is whether you can provide an auditable trace for every AI decision. Establishing clear responsibilities, robust data contracts, and documented data flows protects margins, reputation, and licenses.

Why explainability is becoming the litmus test for AI governance

Audits are shifting from a technology question to a question of accountability. An auditor doesn’t ask, “Do you use AI?” They say, “Show me which data supported this decision and under which rules it was made.”

Many companies have source systems, features, and rule sets. What’s missing is end-to-end traceability. As soon as Legal or Internal Audit asks for the trace, every AI decision turns into a black box.

Governance solves precisely this problem. It establishes who is accountable for what, what quality the data must meet, and how decisions are documented. This makes explainability a built-in principle rather than an afterthought.

Business risks without explanation

- Margin risk: Incorrect decisions or unclear liability lead to corrections, contractual penalties, or provisions.

- Reputational risk: Unexplainable AI decisions damage the trust of customers, supervisory authorities, and employees.

- Licensing risk: Industry regulators and internal auditors demand proof of data origin, rules, and approvals. Without traceability, restrictions or even the suspension of critical use cases are possible.

Three levers that work immediately

- Define responsibilities along the decision flow: Every AI decision needs a business owner who is accountable for its substance, as well as defined roles for data, product, and risk. IT supports the implementation, but subject-matter responsibility lies with the business.

- Define data contracts and control points: Specify what a feature may contain, the quality it must meet, and the documentation it requires. This includes validation rules, data quality metrics, and versioning of features and rule sets.

- Ensure central transparency and escalation: Set up a single point of contact and a target of 48 hours for a complete trace. This requires a register for models and rules, including approvals, monitoring, and an audit log.
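A data contract is most useful when it is checked automatically instead of living only in a document. The following Python sketch illustrates the idea under stated assumptions: the `FeatureContract` class, the feature `days_overdue`, the allowed source `ERP`, and the 1% null-rate threshold are hypothetical examples, not the API of any particular tool.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class FeatureContract:
    """Hypothetical data contract for a single, versioned feature."""
    name: str
    version: str
    allowed_sources: frozenset[str]     # source systems permitted to feed the feature
    validate: Callable[[float], bool]   # value-level validation rule
    max_null_rate: float                # quality threshold: maximum share of missing values


def check_batch(contract: FeatureContract, source: str,
                values: list[Optional[float]]) -> list[str]:
    """Return all contract violations for one batch; an empty list means compliant."""
    label = f"{contract.name} v{contract.version}"
    violations = []
    if source not in contract.allowed_sources:
        violations.append(f"{label}: source '{source}' not allowed")
    if not values:
        violations.append(f"{label}: empty batch")
        return violations
    null_rate = sum(v is None for v in values) / len(values)
    if null_rate > contract.max_null_rate:
        violations.append(f"{label}: null rate {null_rate:.1%} above {contract.max_null_rate:.1%}")
    invalid = [v for v in values if v is not None and not contract.validate(v)]
    if invalid:
        violations.append(f"{label}: {len(invalid)} value(s) fail validation")
    return violations


# Hypothetical contract: overdue days must be non-negative and come from the ERP only.
days_overdue = FeatureContract(
    name="days_overdue",
    version="1.0",
    allowed_sources=frozenset({"ERP"}),
    validate=lambda v: v >= 0,
    max_null_rate=0.01,
)

print(check_batch(days_overdue, "ERP", [0, 12, 45, 3]))     # []
print(check_batch(days_overdue, "CRM", [0, -5, None, 3]))   # three violations
```

Collecting every violation instead of stopping at the first one gives the control point a complete picture to record in the audit log.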

What an auditable decision trace looks like

- Data origin: source, timestamp, permissions, purpose limitation

- Feature documentation: description, transformation, quality indicators, version

- Rule and model version: training data period, release date, responsible persons

- Runtime context: environment, parameters, input, output, deviations

- Controls and approvals: four-eyes review, risk assessment, change history

This structure allows targeted answers to audit questions: Was the decision based on admissible data? Was the correct rule applied? Who gave the go-ahead, and when? What level of quality was demonstrated at the time of the decision?
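The trace structure and the four audit questions can be connected directly in code. The following is a minimal Python sketch, assuming hypothetical record types (`DataOrigin`, `Approval`, `DecisionTrace`) and a simplified reading of the rule question: the correct rule counts as applied when an approval exists for the exact version that ran, dated before the decision. Names, dates, and the quality score in the usage example are illustrative.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class DataOrigin:
    source: str
    timestamp: datetime
    permitted_purposes: frozenset[str]  # purposes this data may be used for


@dataclass(frozen=True)
class Approval:
    approver: str
    approved_at: datetime
    version: str                         # rule or model version that was approved


@dataclass(frozen=True)
class DecisionTrace:
    decision_id: str
    decided_at: datetime
    purpose: str
    origins: tuple[DataOrigin, ...]
    applied_version: str                 # rule or model version actually used at runtime
    approvals: tuple[Approval, ...]
    quality_score: float                 # data quality indicator recorded at decision time


def answer_audit_questions(t: DecisionTrace) -> dict:
    """Answer the four audit questions from one trace record."""
    # 1. Admissible data: at least one source, and every source permits the purpose.
    admissible = bool(t.origins) and all(t.purpose in o.permitted_purposes for o in t.origins)
    # 2./3. Correct rule and go-ahead: approvals of the version that ran, granted beforehand.
    valid = [a for a in t.approvals
             if a.version == t.applied_version and a.approved_at <= t.decided_at]
    return {
        "admissible_data": admissible,
        "approved_version_applied": bool(valid),
        "approved_by": [(a.approver, a.approved_at.isoformat()) for a in valid],
        # 4. Quality demonstrated at the time of the decision.
        "quality_at_decision": t.quality_score,
    }


# Illustrative values only; the approver, dates, and quality score are hypothetical.
trace = DecisionTrace(
    decision_id="example-001",
    decided_at=datetime(2025, 9, 1, 10, 0),
    purpose="credit_limit_review",
    origins=(DataOrigin("ERP", datetime(2025, 9, 1, 6, 0),
                        frozenset({"credit_limit_review"})),),
    applied_version="CreditRisk_2025Q3 v1.12",
    approvals=(Approval("risk_officer", datetime(2025, 8, 30, 9, 0),
                        "CreditRisk_2025Q3 v1.12"),),
    quality_score=0.98,
)
print(answer_audit_questions(trace))
```

Because each answer is derived from stored fields rather than reconstructed after the fact, the same function can be run on any historical decision when an auditor asks.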

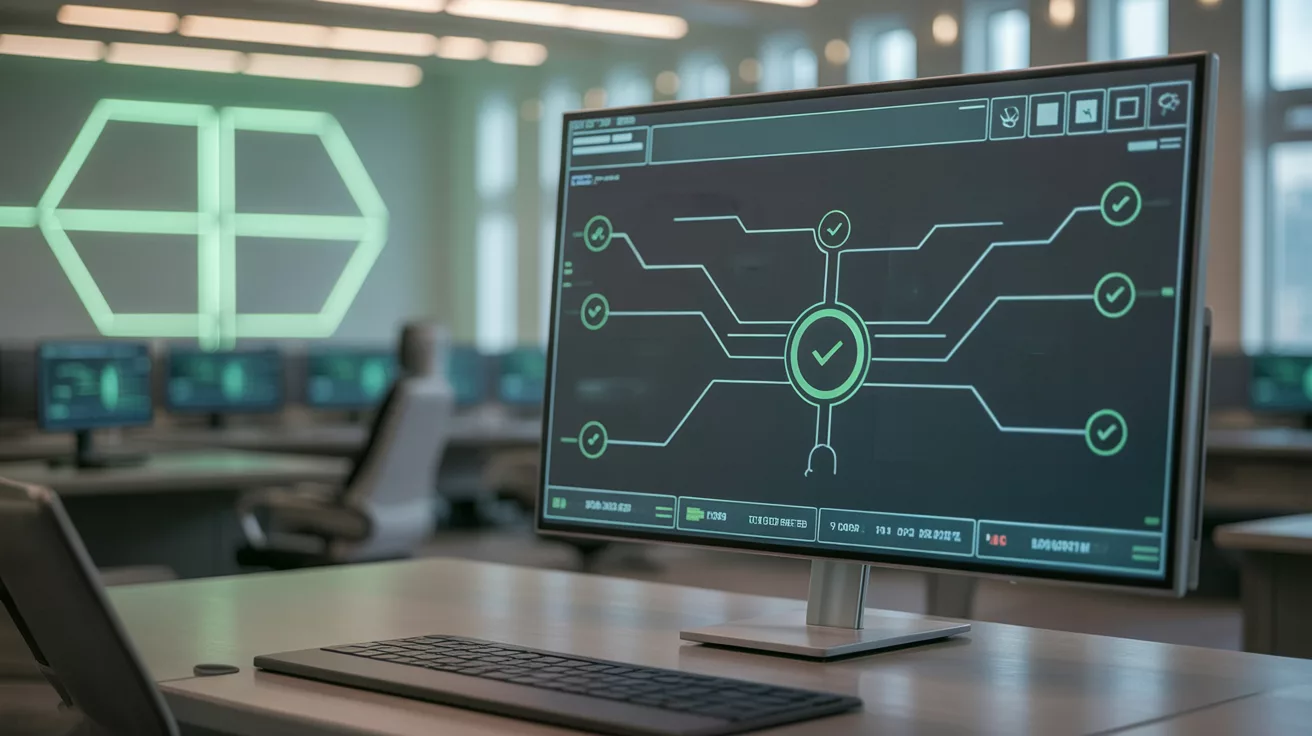

Operating Model for AI Governance

- Policies: Principles for data use, fairness, security, and explainability.

- Processes: Approval before go-live, changes via change control, decommissioning.

- Roles: Product Owner, Data Owner, Model Owner, Risk Officer, Internal Audit.

- Artifacts: Model register, data catalog, feature store, rule library, audit log.

- Metrics: Data quality, model drift, explainability, trace coverage, time to complete trace.
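Several of these metrics can be computed from a simple log of trace requests. This Python sketch assumes a hypothetical `TraceRequest` record and treats outstanding traces as missing the 48-hour target; the field names and the aggregation choices (median time to trace, pooled field coverage) are illustrative assumptions rather than a prescribed method.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

TRACE_TARGET = timedelta(hours=48)  # target time for a complete trace


@dataclass
class TraceRequest:
    decision_id: str
    requested_at: datetime
    completed_at: Optional[datetime]  # None while the trace is still outstanding
    fields_filled: int                # mandatory fields actually documented
    fields_required: int              # mandatory fields in the minimum standard
    has_approval: bool                # documented approval on record


def governance_kpis(requests: list[TraceRequest]) -> dict:
    """Aggregate trace KPIs; outstanding traces count as missing the target."""
    if not requests:
        raise ValueError("no trace requests to evaluate")
    durations = [r.completed_at - r.requested_at
                 for r in requests if r.completed_at is not None]
    n = len(requests)
    return {
        "median_hours_to_trace": (median([d.total_seconds() for d in durations]) / 3600
                                  if durations else None),
        "share_within_target": sum(d <= TRACE_TARGET for d in durations) / n,
        "trace_coverage": (sum(r.fields_filled for r in requests)
                           / sum(r.fields_required for r in requests)),
        "share_with_approval": sum(r.has_approval for r in requests) / n,
    }


start = datetime(2025, 1, 6, 9, 0)  # hypothetical request time
requests = [
    TraceRequest("a", start, start + timedelta(hours=10), 19, 19, True),
    TraceRequest("b", start, start + timedelta(hours=60), 15, 19, True),
    TraceRequest("c", start, None, 9, 19, False),
]
print(governance_kpis(requests))
```

Dividing by all requests, not only completed ones, keeps an open trace from silently improving the target rate.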

Technology follows structure

Tools for data cataloging, feature management, and monitoring are helpful. But they only become effective when responsibilities are clear, data contracts are actively enforced, and trace targets are binding. Structure comes first, then technology.

Status check in two minutes

- Could you provide a complete decision trace within 48 hours if Legal calls?

- If so, where will the bottleneck be as the number of models grows?

- If not, ownership of data contracts or monitoring is missing.

Timetable for the next 90 days

- Create transparency: Set up a model and rule register and record a baseline for the most important use cases.

- Define the minimum trace standard: Specify mandatory fields for origin, feature, version, approvals, and logs. Target time: 48 hours.

- Pilot and scale: Bring one relevant use case up to the new standard, document lessons learned, and roll out to other cases.

- Embed governance: Anchor responsibilities and KPIs in your target and objective systems, and review audit readiness every six months.
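The minimum trace standard from the second step can be enforced as a simple completeness check. In this Python sketch, the section and field names in `MINIMUM_TRACE_STANDARD` are hypothetical, derived from the trace structure described earlier; adapt them to your own mandatory fields.

```python
# Hypothetical minimum trace standard: mandatory fields per trace section.
MINIMUM_TRACE_STANDARD: dict[str, list[str]] = {
    "origin": ["source", "timestamp", "permissions", "purpose"],
    "feature": ["description", "transformation", "quality", "version"],
    "version": ["rule_version", "model_version", "release_date", "owner"],
    "approvals": ["reviewer", "risk_assessment", "approved_at"],
    "log": ["environment", "parameters", "input", "output"],
}

EMPTY = (None, "", [], {})  # values treated as "not documented"


def missing_fields(trace: dict,
                   standard: dict[str, list[str]] = MINIMUM_TRACE_STANDARD) -> list[str]:
    """Return mandatory fields that are absent or empty, as 'section.field' paths."""
    missing = []
    for section, fields in standard.items():
        block = trace.get(section) or {}
        for field in fields:
            if block.get(field) in EMPTY:
                missing.append(f"{section}.{field}")
    return missing


# A draft trace where only the origin and the model version are documented (values hypothetical).
draft = {
    "origin": {"source": "ERP", "timestamp": "2025-01-06T09:00",
               "permissions": "finance", "purpose": "credit review"},
    "version": {"model_version": "v1.12"},
}
gaps = missing_fields(draft)
print(len(gaps), gaps[:2])  # 14 ['feature.description', 'feature.transformation']
```

Run against every pilot decision, the list of gaps shows exactly which fields keep a trace from meeting the standard.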

Mini example

Decision: Credit limit adjustment on September 12th, 10:14 AM

Sources: CRM v23.4 (customer), ERP v12.2 (receivables), credit agency v5.1

Rules: “KYC valid”, “Balance < 60 days overdue”

Feature/Model: Feature set v7.3, model “CreditRisk_2025Q3” v1.12, trained 2025-08-28

Result: Score 0.72 → Limit +10%

Owner: business owner Finance Risk; technical owner Data Platform
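The mini example can also be stored as a structured audit-log entry. This Python sketch uses only the values given above; the field names are hypothetical, and the hash chaining (each entry's SHA-256 hash covers the previous entry's hash) is an assumed technique for making later edits detectable, not something the example prescribes.

```python
import copy
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: dict) -> str:
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], record: dict) -> dict:
    """Append a copy of the record, chained to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    stored = {"record": copy.deepcopy(record), "prev_hash": prev_hash,
              "hash": _digest(prev_hash, record)}
    log.append(stored)
    return stored


def verify(log: list[dict]) -> bool:
    """Recompute every hash; editing any stored record breaks the chain."""
    prev_hash = GENESIS
    for item in log:
        if item["prev_hash"] != prev_hash or item["hash"] != _digest(prev_hash, item["record"]):
            return False
        prev_hash = item["hash"]
    return True


# The mini example as a record (field names hypothetical, values from the example).
entry = {
    "decision": "credit limit adjustment",
    "decision_time": "September 12th, 10:14 AM",  # year not stated in the example
    "sources": {"CRM": "v23.4", "ERP": "v12.2", "credit_agency": "v5.1"},
    "rules": ["KYC valid", "Balance < 60 days overdue"],
    "feature_set": "v7.3",
    "model": {"name": "CreditRisk_2025Q3", "version": "v1.12", "trained": "2025-08-28"},
    "result": {"score": 0.72, "action": "limit +10%"},
    "owners": {"business": "Finance Risk", "technical": "Data Platform"},
}

audit_log: list[dict] = []
append_entry(audit_log, entry)
print(verify(audit_log))                         # True
audit_log[0]["record"]["result"]["score"] = 0.9  # simulate a later edit
print(verify(audit_log))                         # False
```

Storing a deep copy keeps the log independent of the caller's dictionary; durable, append-only storage for the log is outside the scope of this sketch.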

FAQ on AI explainability and governance

Do I need complex tools to become auditable?

Not necessarily. Start with clearly defined roles, binding data agreements, and a clean register. Tools only accelerate the process once the structure is in place.

Is post hoc model explainability sufficient?

Only partially. It supports interpretation but does not replace evidence of data origin, rules, and approvals.

How do I measure progress?

Time to complete trace, coverage of mandatory fields, and the number of decisions with documented approval are three robust key performance indicators.

Conclusion

Explainability is not optional. It is the foundation of governance for AI decisions. Those who establish responsibilities, data contracts, and documentation today will pass every audit tomorrow and protect their margins, reputation, and licenses.

Do you want to pass your audits with confidence from now on?

Dategro partners with mid-sized industrial companies to transform disconnected commercial data into unified performance dashboards—without replacing core systems or creating IT headaches.